The history of synthetic-aperture radar (SAR) begins in 1951 with the invention of the technology by mathematician Carl A. Wiley and continues with its development over the following decade. Initially developed for military use, the technology has since been applied in the field of planetary science.

Invention

Carl A. Wiley,[1] a mathematician at Goodyear Aircraft Company in Litchfield Park, Arizona, invented synthetic-aperture radar in June 1951 while working on a correlation guidance system for the Atlas ICBM program.[2] In early 1952, Wiley, together with Fred Heisley and Bill Welty, constructed a concept validation system known as DOUSER ("Doppler Unbeamed Search Radar"). During the 1950s and 1960s, Goodyear Aircraft (later Goodyear Aerospace) introduced numerous advancements in SAR technology, many with the help of Don Beckerleg.[3]

Independently of Wiley's work, experimental trials in early 1952 by C. W. Sherwin and others at the University of Illinois' Control Systems Laboratory showed results that, as they pointed out, "could provide the basis for radar systems with greatly improved angular resolution" and might even lead to systems capable of focusing at all ranges simultaneously.[4]

In both of those programs, processing of the radar returns was done by electrical-circuit filtering methods. In essence, signal strength in isolated discrete bands of Doppler frequency defined image intensities that were displayed at matching angular positions within proper range locations. When only the central (zero-Doppler band) portion of the return signals was used, the effect was as if only that central part of the beam existed. That led to the term Doppler Beam Sharpening. Displaying returns from several adjacent non-zero Doppler frequency bands accomplished further "beam-subdividing" (sometimes called "unfocused radar", though it could have been considered "semi-focused"). Wiley's patent, applied for in 1954, still proposed similar processing. The bulkiness of the circuitry then available limited the extent to which those schemes might further improve resolution.
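The link between a Doppler band and an angular position can be illustrated with the standard relation for a stationary ground target seen from a moving radar, f_d = (2v/λ)·sin θ, where θ is measured from broadside. The following is a minimal sketch under that assumption; the function names and the example speed, wavelength, and filter bandwidth are hypothetical and are not taken from the Goodyear or Illinois equipment.

```python
import math

def doppler_shift_hz(speed_m_s, wavelength_m, angle_from_broadside_rad):
    """Doppler shift of a stationary ground target at the given angle off broadside."""
    return 2.0 * speed_m_s / wavelength_m * math.sin(angle_from_broadside_rad)

def angle_from_doppler_rad(speed_m_s, wavelength_m, doppler_hz):
    """Invert the relation: the angle off broadside that produces a given Doppler shift."""
    return math.asin(doppler_hz * wavelength_m / (2.0 * speed_m_s))

def sharpened_beamwidth_rad(speed_m_s, wavelength_m, filter_bandwidth_hz):
    """Angular width selected by a filter centered on zero Doppler (the broadside direction)."""
    half_width = angle_from_doppler_rad(speed_m_s, wavelength_m, filter_bandwidth_hz / 2.0)
    return 2.0 * half_width

# Illustrative (assumed) values: 100 m/s aircraft, 3 cm wavelength, 10 Hz filter.
effective_beam = sharpened_beamwidth_rad(100.0, 0.03, 10.0)
```

With these assumed numbers the zero-Doppler filter selects a slice about 1.5 milliradians wide, much narrower than a typical real antenna beam, which is the sense in which the beam is "sharpened".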

The principle was included in a memorandum[5] authored by Walter Hausz of General Electric that was part of the then-secret report of a 1952 Department of Defense (DoD) summer study conference called TEOTA ("The Eyes of the Army"),[6] which sought to identify new techniques useful for military reconnaissance and technical gathering of intelligence. A follow-on summer program in 1953 at the University of Michigan, called Project Wolverine, identified several of the TEOTA subjects, including Doppler-assisted sub-beamwidth resolution, as research efforts to be sponsored by the DoD at various academic and industrial research laboratories. In that same year, the Illinois group produced a "strip-map" image exhibiting a considerable amount of sub-beamwidth resolution.

Project Michigan

Objectives

A more advanced focused-radar project was among several remote sensing schemes assigned in 1953 to Project Michigan, a tri-service-sponsored (Army, Navy, Air Force) program at the University of Michigan's Willow Run Research Center (WRRC) that was administered by the Army Signal Corps. Initially called the side-looking radar project, it was carried out by a group first known as the Radar Laboratory and later as the Radar and Optics Laboratory. It proposed to take into account not just the short-term existence of several particular Doppler shifts but the entire history of the steadily varying shifts from each target as the target crossed the beam. An early analysis by Dr. Louis J. Cutrona, Weston E. Vivian, and Emmett N. Leith of that group showed that such a fully focused system should yield, at all ranges, a resolution equal to the width (or, by some criteria, the half-width) of the real antenna carried on the radar aircraft and continually pointed broadside to the aircraft's path.[7]
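The range-independence of that result can be seen from a short textbook-style argument, sketched here under idealized assumptions (small angles, straight flight, a uniformly illuminated antenna); it is not a reconstruction of the group's own analysis. The symbols are introduced for illustration: λ is the radar wavelength, D the along-track width of the real antenna, R the range, and L_max the longest synthetic aperture over which a target stays in the beam.

$$
\beta \approx \frac{\lambda}{D}, \qquad L_{\max} \approx \beta R = \frac{\lambda R}{D}
$$

$$
\delta_{\text{az}} \approx \frac{\lambda R}{2 L_{\max}} = \frac{D}{2}
$$

The factor of 2 in the second expression arises because the phase of each return depends on the two-way path. Both λ and R cancel: a more distant target is illuminated over a proportionally longer stretch of flight path, which exactly offsets the loss of angular resolution with range. Whether the result is quoted as D or D/2 depends on the resolution criterion adopted.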

Technical and scientific basis

The required data processing amounted to calculating cross-correlations of the received signals with samples of the signal forms to be expected from unit-amplitude sources at the various ranges. At that time, even large digital computers had capabilities roughly comparable to those of today's four-function handheld calculators and so were nowhere near able to perform such a huge amount of computation. Instead, the correlation computations were to be done by an optical correlator.
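The correlation described above can be sketched digitally for a single range line. This is a minimal illustration, not the project's method (which was optical): it assumes an idealized unit-amplitude point target whose along-track phase history is the usual quadratic −2πx²/(λR), and all function names and numeric values are hypothetical.

```python
import cmath

def phase_history(num_samples, spacing_m, wavelength_m, range_m):
    """Expected unit-amplitude return from a point target at the given range,
    sampled at equally spaced along-track positions centered on the target."""
    half = num_samples // 2
    samples = []
    for n in range(num_samples):
        x = (n - half) * spacing_m
        phase = -2.0 * cmath.pi * x * x / (wavelength_m * range_m)
        samples.append(cmath.exp(1j * phase))
    return samples

def cross_correlate(signal, reference):
    """Magnitude of sum(signal[k + n] * conj(reference[n])) for each lag k
    at which the reference fits entirely inside the signal."""
    n_ref = len(reference)
    result = []
    for k in range(len(signal) - n_ref + 1):
        acc = 0j
        for n in range(n_ref):
            acc += signal[k + n] * reference[n].conjugate()
        result.append(abs(acc))
    return result

def simulate_point_target(total_samples, target_start, reference):
    """Noise-free received line containing one target's phase history."""
    signal = [0j] * total_samples
    for n, value in enumerate(reference):
        signal[target_start + n] = value
    return signal

# Assumed values: 65 pulses spaced 1 m apart, 3 cm wavelength, 5 km range.
reference = phase_history(num_samples=65, spacing_m=1.0, wavelength_m=0.03, range_m=5000.0)
received = simulate_point_target(total_samples=200, target_start=40, reference=reference)
image_line = cross_correlate(received, reference)
peak_lag = max(range(len(image_line)), key=image_line.__getitem__)
```

The correlation output peaks at the lag where the target's phase history lines up with the reference, compressing a return spread over 65 pulses into a sharp response. Even this toy case needs about 65 complex multiplications per output point, for every range and every along-track position, which suggests why the computation was beyond the digital machines of the period.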

It was proposed that signals received by the traveling antenna and coherently detected be displayed as a single range-trace line across the diameter of the face of a cathode-ray tube, the line's successive forms being recorded as images projected onto a film traveling perpendicular to the length of that line. The information on the developed film was to be subsequently processed in the laboratory on equipment still to be devised as a principal task of the project. In the initial processor proposal, an arrangement of lenses was expected to multiply the recorded signals point-by-point with the known signal forms by passing light successively through both the signal film and another film containing the known signal pattern. The subsequent summation, or integration, step of the correlation was to be done by converging appropriate sets of multiplication products by the focusing action of one or more spherical and cylindrical lenses. The processor was to be, in effect, an optical analog computer performing large-scale scalar arithmetic calculations in many channels (with many light "rays") at once. Ultimately, two such devices would be needed, their outputs to be combined as quadrature components of the complete solution.

A desire to keep the equipment small had led to recording the reference pattern on 35 mm film. Trials promptly showed that the patterns on the film were so fine as to show pronounced diffraction effects that prevented sharp final focusing.[8]

That led Leith, a physicist who was devising the correlator, to recognize that those effects in themselves could, by natural processes, perform a significant part of the needed processing, since along-track strips of the recording operated like diametrical slices of a series of circular optical zone plates. Any such plate performs somewhat like a lens, each plate having a specific focal length for any given wavelength. The recording that had been considered as scalar became recognized as pairs of opposite-sign vector ones of many spatial frequencies plus a zero-frequency "bias" quantity. The needed correlation summation changed from a pair of scalar ones to a single vector one.

Each zone plate strip has two equal but oppositely signed focal lengths, one real, where a beam through it converges to a focus, and one virtual, where another beam appears to have diverged from, beyond the other face of the zone plate. The zero-frequency (DC bias) component has no focal point, but overlays both the converging and diverging beams. The key to obtaining, from the converging wave component, focused images that are not overlaid with unwanted haze from the other two is to block the latter, allowing only the wanted beam to pass through a properly positioned frequency-band selecting aperture.

Each radar range yields a zone plate strip with a focal length proportional to that range. This fact became a principal complication in the design of optical processors. Consequently, technical journals of the time contain a large volume of material devoted to ways for coping with the variation of focus with range.
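The proportionality can be illustrated with an idealized derivation, assuming a quadratic phase history and a uniform along-track recording scale. The symbols are introduced here for illustration and do not come from the project reports: λ_r is the radar wavelength, λ_o the optical wavelength used in the processor, R the range, and q the ratio of ground distance to film distance, so that a film coordinate x_f corresponds to along-track position x = q·x_f.

$$
\phi_{\text{signal}}(x_f) = -\frac{4\pi}{\lambda_r}\cdot\frac{(q\,x_f)^2}{2R} = -\frac{2\pi q^2 x_f^2}{\lambda_r R},
\qquad
\phi_{\text{lens}}(x_f) = -\frac{\pi x_f^2}{\lambda_o f}
$$

$$
\phi_{\text{signal}} = \phi_{\text{lens}} \;\Longrightarrow\; f = \frac{\lambda_r}{2\,\lambda_o\,q^2}\,R
$$

Because the film records a real-valued signal on a bias level, the recorded pattern contains both signs of the quadratic phase, which corresponds to the two focal lengths +f and −f and the unfocused bias term. Since f grows linearly with R, signals from different ranges come to focus in different planes, which is the complication the optical designs had to remove.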

For that major change in approach, the light used had to be both monochromatic and coherent, properties that were already required of the radar radiation. With lasers still in the future, the best then-available approximation to a coherent light source was the output of a mercury vapor lamp, passed through a color filter that was matched to the lamp spectrum's green band, and then concentrated as well as possible onto a very small beam-limiting aperture. Although the resulting light was so weak that very long exposure times had to be used, a workable optical correlator was assembled in time to be used when appropriate data became available.

Although creating the radar itself was a more straightforward task based on already-known techniques, that work did demand signal linearity and frequency stability at the extreme state of the art. An adequate instrument was designed and built by the Radar Laboratory and was installed in a C-46 (Curtiss Commando) aircraft. Because the aircraft was bailed to WRRC by the U.S. Army and was flown and maintained by WRRC's own pilots and ground personnel, it was available for many flights at times matching the Radar Laboratory's needs, a feature important for allowing frequent re-testing and "debugging" of the continually developing complex equipment. By contrast, the Illinois group had used a C-46 belonging to the Air Force and flown by Air Force pilots only by pre-arrangement. In the eyes of those researchers, that limited flight tests of their equipment to a less-than-desirable frequency and so slowed the feedback from tests. (Later work with newer Convair aircraft continued the Michigan group's local control of flight schedules.)

Results

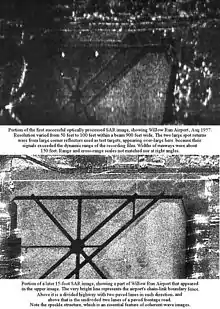

Michigan's chosen 5-foot (1.5 m)-wide World War II-surplus antenna was theoretically capable of 5-foot (1.5 m) resolution, but data from only 10% of the beamwidth was used at first, the goal at that time being to demonstrate 50-foot (15 m) resolution. It was understood that finer resolution would require the added development of means for sensing departures of the aircraft from an ideal heading and flight path, and for using that information for making needed corrections to the antenna pointing and to the received signals before processing. After numerous trials in which even small atmospheric turbulence kept the aircraft from flying straight and level enough for good 50-foot (15 m) data, one pre-dawn flight in August 1957[9] yielded a map-like image of the Willow Run Airport area which did demonstrate 50-foot (15 m) resolution in some parts of the image, whereas the illuminated beam width there was 900 feet (270 m). Although the program had been considered for termination by DoD due to what had seemed to be a lack of results, that first success ensured further funding to continue development leading to solutions to those recognized needs.
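The figures in this paragraph are related by simple proportion: for small angles, the usable synthetic aperture length scales with the fraction of the beamwidth that is processed, and along-track resolution is inversely proportional to that length. The sketch below assumes that idealized scaling and the criterion under which full-beam resolution equals the antenna width; the function names are hypothetical.

```python
def resolution_from_beam_fraction(full_beam_resolution_ft, beam_fraction_used):
    """Along-track resolution when only part of the beamwidth is processed.

    Assumes resolution is inversely proportional to the processed fraction.
    """
    if not 0.0 < beam_fraction_used <= 1.0:
        raise ValueError("beam_fraction_used must be in the interval (0, 1]")
    return full_beam_resolution_ft / beam_fraction_used

def sharpening_ratio(real_beam_width_ft, achieved_resolution_ft):
    """How many times finer the processed image is than the illuminated beam width."""
    return real_beam_width_ft / achieved_resolution_ft

# Figures quoted in the text: 5 ft antenna, 10% of the beam, 900 ft illuminated width.
goal_ft = resolution_from_beam_fraction(5.0, 0.10)
improvement = sharpening_ratio(900.0, goal_ft)
```

Under these assumptions, processing 10% of the beam gives the 50-foot goal, and the August 1957 image was about 18 times finer than the 900-foot illuminated beam width at that range.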

Public acknowledgement

The SAR principle was first acknowledged publicly via an April 1960 press release about the U.S. Army experimental AN/UPD-1 system, which consisted of an airborne element made by Texas Instruments and installed in a Beech L-23D aircraft and a mobile ground data-processing station made by WRRC and installed in a military van. At the time, the nature of the data processor was not revealed. A technical article in the journal of the IRE (Institute of Radio Engineers) Professional Group on Military Electronics in April 1961[10] described the SAR principle and both the C-46 and AN/UPD-1 versions, but did not tell how the data were processed, nor that the UPD-1's maximum resolution capability was about 50 feet (15 m). However, the June 1960 issue of the journal of the IRE Professional Group on Information Theory had contained a long article[11] on "Optical Data Processing and Filtering Systems" by members of the Michigan group. Although it did not refer to the use of those techniques for radar, readers of both journals could quite easily infer a connection between articles sharing some authors.

Vietnam

An operational system to be carried in a reconnaissance version of the F-4 "Phantom" aircraft was quickly devised and was used briefly in Vietnam, where it failed to favorably impress its users, due to the combination of its low resolution (similar to the UPD-1's), the speckly nature of its coherent-wave images (similar to the speckliness of laser images), and the poorly understood dissimilarity of its range/cross-range images from the angle/angle optical ones familiar to military photo interpreters. The lessons it provided were well learned by subsequent researchers, operational system designers, image-interpreter trainers, and the DoD sponsors of further development and acquisition.

Subsequent improvement

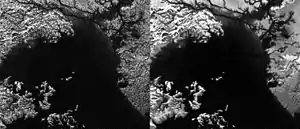

In subsequent work the technique's latent capability was eventually achieved. That work, depending on advanced radar circuit designs and precision sensing of departures from ideal straight flight, along with more sophisticated optical processors using laser light sources and specially designed very large lenses made from remarkably clear glass, allowed the Michigan group to advance system resolution, at about 5-year intervals, first to 15 feet (4.6 m), then 5 feet (1.5 m), and, by the mid-1970s, to 1 foot (0.30 m), the last only over very short range intervals while processing was still being done optically. Those finer levels and the associated very wide dynamic range proved suitable for identifying many objects of military concern as well as soil, water, vegetation, and ice features being studied by a variety of environmental researchers having security clearances allowing them access to what was then classified imagery. Similarly improved operational systems soon followed each of those finer-resolution steps.

Even the 5-foot (1.5 m) resolution stage had over-taxed the ability of cathode-ray tubes (limited to about 2000 distinguishable items across the screen diameter) to deliver fine enough details to signal films while still covering wide range swaths, and taxed the optical processing systems in similar ways. However, at about the same time, digital computers finally became capable of doing the processing without similar limitation, and the consequent presentation of the images on cathode ray tube monitors instead of film allowed for better control over tonal reproduction and for more convenient image mensuration.

Achievement of the finest resolutions at long ranges was aided by adding the capability to swing a larger airborne antenna so as to more strongly illuminate a limited target area continually while collecting data over several degrees of aspect, removing the previous limitation of resolution to the antenna width. This was referred to as the spotlight mode, which no longer produced continuous-swath images but, instead, images of isolated patches of terrain.
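The gain from spotlight operation can be expressed with the commonly used small-angle approximation below, given here as an illustrative sketch rather than as a formula from the cited sources. The symbols are introduced for illustration: λ is the radar wavelength, Δθ the total change in aspect angle (in radians) over which data are collected, and D the along-track width of the antenna.

$$
\delta_{\text{az}} \approx \frac{\lambda}{2\,\Delta\theta}
$$

In strip-map operation Δθ cannot exceed the real beamwidth, roughly λ/D, which gives the limit of about D/2. Steering the antenna to hold the beam on one patch lets Δθ exceed the beamwidth. With assumed values of λ = 3 cm and Δθ = 3° (about 0.052 rad), the expression gives roughly 0.29 m, on the order of the 1-foot figure reached in the mid-1970s.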

Out-of-the-atmosphere platform

It was understood very early in SAR development that the extremely smooth orbital path of an out-of-the-atmosphere platform made it ideally suited to SAR operation. Early experience with artificial earth satellites had also demonstrated that the Doppler frequency shifts of signals traveling through the ionosphere and atmosphere were stable enough to permit very fine resolution to be achievable even at ranges of hundreds of kilometers.[12] The first spaceborne SAR images of Earth were demonstrated by a project now referred to as Quill (declassified in 2012).[13]

Digitisation

After the initial work began, several of the capabilities for creating useful classified systems did not exist for another two decades. That seemingly slow rate of advances was often paced by the progress of other inventions, such as the laser, the digital computer, circuit miniaturization, and compact data storage. Once the laser appeared, optical data processing became a fast process because it provided many parallel analog channels, but devising optical chains suited to matching signal focal lengths to ranges proceeded by many stages and turned out to call for some novel optical components. Since the process depended on diffraction of light waves, it required anti-vibration mountings, clean rooms, and highly trained operators. Even at its best, its use of CRTs and film for data storage placed limits on the range depth of images.

At several stages, attaining the frequently over-optimistic expectations for digital computation equipment proved to take far longer than anticipated. For example, the Seasat system was ready to orbit before its digital processor became available, so a quickly assembled optical recording and processing scheme had to be used to obtain timely confirmation of system operation. In 1978, the first digital SAR processor was developed by the Canadian aerospace company MacDonald Dettwiler (MDA).[14] When Seasat's digital processor was finally completed and used, the digital equipment of that time took many hours to create one swath of image from each run of a few seconds of data.[15] Still, while that was a step down in speed, it was a step up in image quality. Modern methods now provide both high speed and high quality.

Data collection

Highly accurate data can be collected by aircraft overflying the terrain in question. In the 1980s, as a prototype for instruments to be flown on the NASA Space Shuttles, NASA operated a synthetic aperture radar on a NASA Convair 990. In 1986, this plane caught fire on takeoff. In 1988, NASA rebuilt a C-, L-, and P-band SAR to fly on the NASA DC-8 aircraft. Called AIRSAR, it flew missions at sites around the world until 2004. Another such aircraft, the Convair 580, was flown by the Canada Centre for Remote Sensing until about 1996, when it was handed over to Environment Canada for budgetary reasons. Most land-surveying applications are now carried out by satellite observation. Satellites such as ERS-1/2, JERS-1, Envisat ASAR, and RADARSAT-1 were launched explicitly to carry out this sort of observation. Their capabilities differ, particularly in their support for interferometry, but all have collected large amounts of valuable data. The Space Shuttle also carried synthetic aperture radar equipment during the SIR-A and SIR-B missions in the 1980s, the Shuttle Radar Laboratory (SRL) missions in 1994, and the Shuttle Radar Topography Mission in 2000.

Venera 15 and Venera 16, followed later by the Magellan space probe, mapped the surface of Venus over several years using synthetic aperture radar.

Synthetic aperture radar was first used by NASA on JPL's Seasat oceanographic satellite in 1978 (this mission also carried an altimeter and a scatterometer); it was later developed more extensively on the Spaceborne Imaging Radar (SIR) missions on the Space Shuttle in 1981, 1984 and 1994. The Cassini mission to Saturn used SAR to map the surface of the planet's major moon Titan, whose surface is partly hidden from direct optical inspection by atmospheric haze. The SHARAD sounding radar on the Mars Reconnaissance Orbiter and the MARSIS instrument on Mars Express have observed bedrock beneath the surface of the Martian polar ice and also indicated the likelihood of substantial water ice in the Martian middle latitudes. The Lunar Reconnaissance Orbiter, launched in 2009, carries a SAR instrument called Mini-RF, which was designed largely to look for water ice deposits at the poles of the Moon.

The Mineseeker Project is designing a system for determining whether regions contain landmines based on a blimp carrying ultra-wideband synthetic aperture radar. Initial trials show promise; the radar is able to detect even buried plastic mines.

The National Reconnaissance Office maintains a fleet of (now declassified) synthetic aperture radar satellites commonly designated as Lacrosse or Onyx.

In February 2009, the Sentinel R1 surveillance aircraft entered service in the RAF, equipped with the SAR-based Airborne Stand-Off Radar (ASTOR) system.

The German Armed Forces' (Bundeswehr) military SAR-Lupe reconnaissance satellite system has been fully operational since 22 July 2008.

As of January 2021, multiple commercial companies have started launching constellations of satellites for collecting SAR imagery of Earth.[16]

Data distribution

The Alaska Satellite Facility provides production, archiving and distribution to the scientific community of SAR data products and tools from active and past missions, including the June 2013 release of newly processed, 35-year-old Seasat SAR imagery.

The Center for Southeastern Tropical Advanced Remote Sensing (CSTARS) downlinks and processes SAR data (as well as other data) from a variety of satellites and supports the University of Miami Rosenstiel School of Marine and Atmospheric Science. CSTARS also supports disaster relief operations, oceanographic and meteorological research, and port and maritime security research projects.

References

1. "In Memory of Carl A. Wiley", A. W. Love, IEEE Antennas and Propagation Society Newsletter, pp. 17–18, June 1985.

2. "Synthetic Aperture Radars: A Paradigm for Technology Evolution", C. A. Wiley, IEEE Transactions on Aerospace and Electronic Systems, v. AES-21, n. 3, pp. 440–443, May 1985.

3. Gart, Jason H. "Electronics and Aerospace Industry in Cold War Arizona, 1945–1968: Motorola, Hughes Aircraft, Goodyear Aircraft." PhD diss., Arizona State University, 2006.

4. "Some Early Developments in Synthetic Aperture Radar Systems", C. W. Sherwin, J. P. Ruina, and R. D. Rawcliffe, IRE Transactions on Military Electronics, April 1962, pp. 111–115.

5. This memo was one of about 20 published as a volume subsidiary to the following reference. No unclassified copy has yet been located. Hopefully, some reader of this article may come across a still existing one.

6. "Problems of Battlefield Surveillance", Report of Project TEOTA (The Eyes of the Army), 1 May 1953, Office of the Chief Signal Officer. Defense Technical Information Center (Document AD 32532).

7. "A Doppler Technique for Obtaining Very Fine Angular Resolution from a Side-Looking Airborne Radar", Report of Project Michigan No. 2144-5-T, The University of Michigan, Willow Run Research Center, July 1954. (No declassified copy of this historic, originally confidential report has yet been located.)

8. "A short history of the Optics Group of the Willow Run Laboratories", Emmett N. Leith, in Trends in Optics: Research, Development, and Applications (book), Anna Consortini, Academic Press, San Diego: 1996.

9. "High-Resolution Radar Achievements During Preliminary Flight Tests", W. A. Blikken and G. O. Hall, Institute of Science and Technology, Univ. of Michigan, 1 September 1957. Defense Technical Information Center (Document AD148507).

10. "A High-Resolution Radar Combat-Intelligence System", L. J. Cutrona, W. E. Vivian, E. N. Leith, and G. O. Hall, IRE Transactions on Military Electronics, April 1961, pp. 127–131.

11. "Optical Data Processing and Filtering Systems", L. J. Cutrona, E. N. Leith, C. J. Palermo, and L. J. Porcello, IRE Transactions on Information Theory, June 1960, pp. 386–400.

12. "An experimental study of rapid phase fluctuations induced along a satellite to earth propagation path", L. J. Porcello, Univ. of Michigan, April 1964.

13. Robert L. Butterworth, "Quill: The First Imaging Radar Satellite".

14. "Observation of the earth and its environment: survey of missions and sensors", Herbert J. Kramer.

15. "Principles of Synthetic Aperture Radar", S. W. McCandless and C. R. Jackson, Chapter 1 of "SAR Marine Users Manual", NOAA, 2004, p. 11.

16. Werner, Debra (January 4, 2021). "Spacety shares first images from small C-band SAR satellite". Space News. Quote: "Finland's Iceye operates five X-band SAR satellites. San Francisco-based Capella Space began releasing imagery in October from its first operational satellite, Sequoia, which also operates in X-band. Spacety plans to build, launch and operate a constellation of 56 small SAR satellites."