In information theory, dual total correlation,[1] information rate,[2] excess entropy,[3][4] or binding information[5] is one of several known non-negative generalizations of mutual information. While total correlation is bounded by the sum of the entropies of the n elements, the dual total correlation is bounded by the joint entropy of the n elements. Although well behaved, dual total correlation has received much less attention than the total correlation. A measure known as "TSE-complexity" defines a continuum between the total correlation and dual total correlation.[3]

Definition

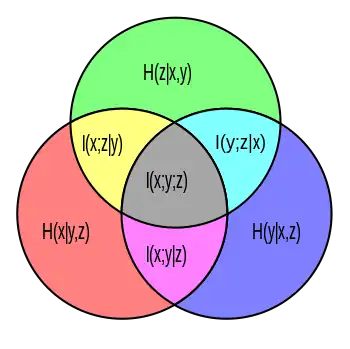

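For a set of n random variables $\{X_1,\ldots,X_n\}$, the dual total correlation $D(X_1,\ldots,X_n)$ is given by

$$D(X_1,\ldots,X_n) = H(X_1,\ldots,X_n) - \sum_{i=1}^{n} H(X_i \mid X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n),$$

where $H(X_1,\ldots,X_n)$ is the joint entropy of the variable set $\{X_1,\ldots,X_n\}$ and $H(X_i \mid \cdots)$ is the conditional entropy of variable $X_i$, given the rest.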
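As a minimal sketch, the definition can be evaluated directly for a small discrete distribution. The code assumes the joint distribution is given as a Python dict mapping outcome tuples to probabilities, measures entropy in bits, and uses the chain rule $H(X_i \mid \text{rest}) = H(X_1,\ldots,X_n) - H(\text{rest})$. The helper names `entropy`, `marginal`, and `dual_total_correlation` are hypothetical and illustrative, not a library API.

```python
from collections import defaultdict
from math import log2

# Illustrative helpers (hypothetical names, not a library API).


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def marginal(joint, keep):
    """Marginalize a joint pmf {tuple: prob} onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[k] for k in keep)] += p
    return dict(out)


def dual_total_correlation(joint):
    """D = H(X_1..X_n) - sum_i H(X_i | all other variables), in bits."""
    n = len(next(iter(joint)))
    h_joint = entropy(joint)
    cond_sum = 0.0
    for i in range(n):
        rest = [k for k in range(n) if k != i]
        # Chain rule: H(X_i | rest) = H(X_1..X_n) - H(rest)
        cond_sum += h_joint - entropy(marginal(joint, rest))
    return h_joint - cond_sum


# Z = X XOR Y, with X and Y independent fair bits.
xor = {(0, 0, 0): 0.25, (0, 1, 1): 0.25, (1, 0, 1): 0.25, (1, 1, 0): 0.25}
# Three identical copies of a single fair bit.
copy = {(0, 0, 0): 0.5, (1, 1, 1): 0.5}

print(dual_total_correlation(xor))   # 2.0
print(dual_total_correlation(copy))  # 1.0
```

In the XOR case every variable is fully determined by the other two, so all conditional entropies vanish and $D$ equals the full joint entropy of 2 bits.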

Normalized

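The dual total correlation normalized to $[0,1]$ is simply the dual total correlation divided by its maximum value, the joint entropy $H(X_1,\ldots,X_n)$:

$$ND(X_1,\ldots,X_n) = \frac{D(X_1,\ldots,X_n)}{H(X_1,\ldots,X_n)}.$$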
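A minimal sketch of the normalization, under the same assumptions as a dict-based pmf in bits. Returning 0.0 when the joint entropy is zero is an assumed convention (the ratio is otherwise undefined), and the function name is hypothetical.

```python
from collections import defaultdict
from math import log2

# Illustrative helpers (hypothetical names, not a library API).


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def marginal(joint, keep):
    """Marginalize a joint pmf {tuple: prob} onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[k] for k in keep)] += p
    return dict(out)


def normalized_dual_total_correlation(joint):
    """D / H(X_1..X_n); returns 0.0 for zero joint entropy (assumed convention)."""
    n = len(next(iter(joint)))
    h_joint = entropy(joint)
    if h_joint == 0:
        return 0.0
    # Sum of H(X_i | rest) = H(X_1..X_n) - H(rest) over all i.
    cond_sum = sum(
        h_joint - entropy(marginal(joint, [k for k in range(n) if k != i]))
        for i in range(n)
    )
    return (h_joint - cond_sum) / h_joint


# X0 = X1 is a shared fair bit; X2 is an independent fair bit.
mixed = {(0, 0, 0): 0.25, (0, 0, 1): 0.25, (1, 1, 0): 0.25, (1, 1, 1): 0.25}
print(normalized_dual_total_correlation(mixed))  # 0.5
```

Here $H = 2$ bits and only $X_2$ retains uncertainty given the rest, so $D = 1$ bit and half of the joint entropy is shared.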

Relationship with Total Correlation

Dual total correlation is non-negative and bounded above by the joint entropy $H(X_1,\ldots,X_n)$:

$$0 \le D(X_1,\ldots,X_n) \le H(X_1,\ldots,X_n).$$

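Second, dual total correlation has a close relationship with total correlation, $C(X_1,\ldots,X_n) = \sum_{i=1}^{n} H(X_i) - H(X_1,\ldots,X_n)$, and can be written in terms of differences between the total correlation of the whole and that of all subsets of size $n-1$:[6]

$$D(\mathbf{X}) = (n-1)\,C(\mathbf{X}) - \sum_{i=1}^{n} C(\mathbf{X}^{-i}),$$

where $\mathbf{X} = \{X_1,\ldots,X_n\}$ and $\mathbf{X}^{-i} = \{X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n\}$.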
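The identity relating dual total correlation to the total correlations of the whole and of its size-$(n-1)$ subsets can be spot-checked numerically. This sketch assumes a dict-based pmf in bits and draws a random distribution over four binary variables purely for illustration; all function names are hypothetical.

```python
import random
from collections import defaultdict
from itertools import product
from math import isclose, log2

# Illustrative helpers (hypothetical names, not a library API).


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def marginal(joint, keep):
    """Marginalize a joint pmf {tuple: prob} onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[k] for k in keep)] += p
    return dict(out)


def total_correlation(joint, idx):
    """C of the variables in `idx`: sum of marginal entropies minus their joint entropy."""
    return sum(entropy(marginal(joint, [k])) for k in idx) - entropy(marginal(joint, idx))


def dual_total_correlation(joint, n):
    """D = H(X_1..X_n) - sum_i H(X_i | rest)."""
    h = entropy(joint)
    return h - sum(h - entropy(marginal(joint, [k for k in range(n) if k != i])) for i in range(n))


def random_pmf(n, rng):
    """Random joint pmf over n binary variables (for illustration only)."""
    outcomes = list(product((0, 1), repeat=n))
    weights = [rng.random() for _ in outcomes]
    total = sum(weights)
    return {o: w / total for o, w in zip(outcomes, weights)}


rng = random.Random(0)
n = 4
joint = random_pmf(n, rng)
everything = list(range(n))
lhs = dual_total_correlation(joint, n)
# (n - 1) C(X) - sum_i C(X^{-i})
rhs = (n - 1) * total_correlation(joint, everything) - sum(
    total_correlation(joint, [k for k in everything if k != i]) for i in everything
)
print(lhs, rhs, isclose(lhs, rhs, abs_tol=1e-9))
```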

Furthermore, the total correlation and dual total correlation are related by the following bounds:

$$\frac{C(X_1,\ldots,X_n)}{n-1} \le D(X_1,\ldots,X_n) \le (n-1)\, C(X_1,\ldots,X_n).$$
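The bounds can be probed empirically. This sketch assumes dict-based pmfs in bits, draws random distributions over two to four ternary variables, and checks both the bounds relative to total correlation and the non-negativity and joint-entropy bound stated earlier in this section. It illustrates the inequalities on samples; it is not a proof, and the function names are hypothetical.

```python
import random
from collections import defaultdict
from itertools import product
from math import log2

# Illustrative helpers (hypothetical names, not a library API).


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def marginal(joint, keep):
    """Marginalize a joint pmf {tuple: prob} onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[k] for k in keep)] += p
    return dict(out)


def tc_and_dtc(joint, n):
    """Return (joint entropy H, total correlation C, dual total correlation D)."""
    h = entropy(joint)
    c = sum(entropy(marginal(joint, [k])) for k in range(n)) - h
    d = h - sum(h - entropy(marginal(joint, [k for k in range(n) if k != i])) for i in range(n))
    return h, c, d


def check_bounds(joint, n, tol=1e-9):
    """True if 0 <= D <= H and C/(n-1) <= D <= (n-1) C, up to float tolerance."""
    h, c, d = tc_and_dtc(joint, n)
    return (-tol <= d <= h + tol) and (c / (n - 1) - tol <= d <= (n - 1) * c + tol)


rng = random.Random(1)
for trial in range(200):
    n = rng.choice([2, 3, 4])
    outcomes = list(product(range(3), repeat=n))  # ternary alphabet
    weights = [rng.random() ** 4 for _ in outcomes]  # skew toward uneven pmfs
    total = sum(weights)
    joint = {o: w / total for o, w in zip(outcomes, weights)}
    assert check_bounds(joint, n), (trial, n)
print("all bounds held")
```

For $n = 2$ both bounds collapse to $C = D$, since both quantities then equal the mutual information.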

Finally, the difference between the total correlation and the dual total correlation defines a novel measure of higher-order information sharing, the O-information:[7]

$$\Omega(X_1,\ldots,X_n) = C(X_1,\ldots,X_n) - D(X_1,\ldots,X_n).$$

The O-information (first introduced as the "enigmatic information" by James, Ellison, and Crutchfield[8]) is a signed measure that quantifies the extent to which the information in a multivariate random variable is dominated by synergistic interactions (in which case $\Omega(X_1,\ldots,X_n) < 0$) or redundant interactions (in which case $\Omega(X_1,\ldots,X_n) > 0$).
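A minimal sketch of the O-information as $C - D$, assuming dict-based pmfs in bits, with a hypothetical function name. The XOR triple is a standard synergistic example and three copies of one bit a standard redundant one, so the signs should come out negative and positive respectively.

```python
from collections import defaultdict
from math import log2

# Illustrative helpers (hypothetical names, not a library API).


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def marginal(joint, keep):
    """Marginalize a joint pmf {tuple: prob} onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[k] for k in keep)] += p
    return dict(out)


def o_information(joint):
    """Omega = total correlation C minus dual total correlation D, in bits."""
    n = len(next(iter(joint)))
    h = entropy(joint)
    c = sum(entropy(marginal(joint, [k])) for k in range(n)) - h
    d = h - sum(h - entropy(marginal(joint, [k for k in range(n) if k != i])) for i in range(n))
    return c - d


xor = {(0, 0, 0): 0.25, (0, 1, 1): 0.25, (1, 0, 1): 0.25, (1, 1, 0): 0.25}
copy = {(0, 0, 0): 0.5, (1, 1, 1): 0.5}

print(o_information(xor))   # -1.0 (synergy-dominated)
print(o_information(copy))  # 1.0 (redundancy-dominated)
```

For XOR, $C = 1$ and $D = 2$ bits; for the copy system, $C = 2$ and $D = 1$ bit.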

History

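Han (1978) originally defined the dual total correlation as

$$D(X_1,\ldots,X_n) \equiv \left[\sum_{i=1}^{n} H(X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n)\right] - (n-1)\,H(X_1,\ldots,X_n).$$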

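However, Abdallah and Plumbley (2010) showed its equivalence to the easier-to-understand form of the joint entropy minus the sum of conditional entropies via the following:

$$\begin{aligned}
D(X_1,\ldots,X_n) &= \left[\sum_{i=1}^{n} H(X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n)\right] - (n-1)\,H(X_1,\ldots,X_n)\\
&= \left[\sum_{i=1}^{n} H(X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n)\right] - n\,H(X_1,\ldots,X_n) + H(X_1,\ldots,X_n)\\
&= H(X_1,\ldots,X_n) - \sum_{i=1}^{n} \left[H(X_1,\ldots,X_n) - H(X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n)\right]\\
&= H(X_1,\ldots,X_n) - \sum_{i=1}^{n} H(X_i \mid X_1,\ldots,X_{i-1},X_{i+1},\ldots,X_n).
\end{aligned}$$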
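The equivalence of Han's original form and the conditional-entropy form can also be checked numerically. This sketch assumes a dict-based pmf in bits and a randomly drawn distribution over four binary variables; the function names `drop`, `han_form`, and `conditional_form` are hypothetical.

```python
import random
from collections import defaultdict
from itertools import product
from math import isclose, log2

# Illustrative helpers (hypothetical names, not a library API).


def entropy(pmf):
    """Shannon entropy in bits of a pmf given as {outcome: probability}."""
    return -sum(p * log2(p) for p in pmf.values() if p > 0)


def marginal(joint, keep):
    """Marginalize a joint pmf {tuple: prob} onto the variable indices in `keep`."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[k] for k in keep)] += p
    return dict(out)


def drop(n, i):
    """Indices of all variables except X_i."""
    return [k for k in range(n) if k != i]


def han_form(joint, n):
    """Han (1978): sum_i H(X^{-i}) - (n - 1) H(X_1..X_n)."""
    return sum(entropy(marginal(joint, drop(n, i))) for i in range(n)) - (n - 1) * entropy(joint)


def conditional_form(joint, n):
    """H(X_1..X_n) - sum_i H(X_i | X^{-i}), with H(X_i | X^{-i}) = H(X_1..X_n) - H(X^{-i})."""
    h = entropy(joint)
    return h - sum(h - entropy(marginal(joint, drop(n, i))) for i in range(n))


rng = random.Random(42)
n = 4
outcomes = list(product((0, 1), repeat=n))
weights = [rng.random() for _ in outcomes]
total = sum(weights)
joint = {o: w / total for o, w in zip(outcomes, weights)}
print(han_form(joint, n), conditional_form(joint, n))
```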


Bibliography

Footnotes

- [1] Han, Te Sun (1978). "Nonnegative entropy measures of multivariate symmetric correlations". Information and Control. 36 (2): 133–156. doi:10.1016/S0019-9958(78)90275-9.

- [2] Dubnov, Shlomo (2006). "Spectral Anticipations". Computer Music Journal. 30 (2): 63–83. doi:10.1162/comj.2006.30.2.63. S2CID 2202704.

- [3] Ay, Nihat; Olbrich, E.; Bertschinger, N. (2001). "A unifying framework for complexity measures of finite systems". European Conference on Complex Systems.

- [4] Olbrich, E.; Bertschinger, N.; Ay, N.; Jost, J. (2008). "How should complexity scale with system size?". The European Physical Journal B. 63 (3): 407–415. Bibcode:2008EPJB...63..407O. doi:10.1140/epjb/e2008-00134-9. S2CID 120391127.

- [5] Abdallah, Samer A.; Plumbley, Mark D. (2010). "A measure of statistical complexity based on predictive information". arXiv:1012.1890v1 [math.ST].

- [6] Varley, Thomas F.; Pope, Maria; Faskowitz, Joshua; Sporns, Olaf (24 April 2023). "Multivariate information theory uncovers synergistic subsystems of the human cerebral cortex". Communications Biology. 6 (1). doi:10.1038/s42003-023-04843-w. PMC 10125999.

- [7] Rosas, Fernando E.; Mediano, Pedro A. M.; Gastpar, Michael; Jensen, Henrik J. (13 September 2019). "Quantifying high-order interdependencies via multivariate extensions of the mutual information". Physical Review E. 100 (3). arXiv:1902.11239. doi:10.1103/PhysRevE.100.032305.

- [8] James, Ryan G.; Ellison, Christopher J.; Crutchfield, James P. (1 September 2011). "Anatomy of a bit: Information in a time series observation". Chaos: An Interdisciplinary Journal of Nonlinear Science. 21 (3). arXiv:1105.2988. doi:10.1063/1.3637494.

References

- Fujishige, Satoru (1978). "Polymatroidal dependence structure of a set of random variables". Information and Control. 39: 55–72. doi:10.1016/S0019-9958(78)91063-X.

- Varley, Thomas F.; Pope, Maria; Faskowitz, Joshua; Sporns, Olaf (2023). "Multivariate information theory uncovers synergistic subsystems of the human cerebral cortex". Communications Biology. doi:10.1038/s42003-023-04843-w. PMC 10125999.